The ship sailed when I was moved to rant about cable news, but I originally had some idea that Sideband #32 should be another rumination on bits and binary (like Sidebands #25 and #28). After all, 32-bit systems are the common currency these days, and 32 bits jumps you from the toy computer world to the real computer world. Unicode, for example, although it is not technically a “32-bit standard,” fits most naturally in a 32-bit architecture.

When you go from 16-bit systems to 32-bit systems, your counting ability leaps from 64 K (65,536 to be precise) to 4 gig (full precision version: 4,294,967,296). This is what makes 16-bit systems “toys” (although some are plenty sophisticated). Numbers no bigger than 65 thousand (half that if you want plus and minus numbers) just don’t go very far.

But 32 bits gives you over four billion integers, or it can produce floating point with about seven digits of precision and more than eighty orders of magnitude. Specifically, you can count from the teeny tiny 1.40129846e-45 to the enormously huge 3.40282347e+38 (writing those out in full would involve lots of zeros!).
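The two floating-point limits quoted above can be checked by building the bit patterns directly. This is a minimal sketch that assumes the common IEEE 754 single-precision layout (the post does not name a format, but its numbers match that one); the helper name `float32_from_bits` is made up for illustration.

```python
import struct

def float32_from_bits(bits: int) -> float:
    """Reinterpret a 32-bit pattern as a single-precision float (IEEE 754 assumed)."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]

# Smallest positive value: only the lowest bit is set.
smallest = float32_from_bits(0x00000001)

# Largest finite value: highest exponent short of infinity, every fraction bit set.
largest = float32_from_bits(0x7F7FFFFF)

print(smallest)  # roughly 1.4e-45
print(largest)   # roughly 3.4e+38
```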

Which is definitely not toy computing! However, with 64-bit systems becoming the common currency, the lowly 32-bit system begins to seem almost quaint and very last millennium. (You might think 64 bits gives you just twice what 32 bits gives you, but you’d be wrong—don’t forget that big jump from 16 to 32! Each bit you add gives you twice what you had, so adding 32 more of them doubles the count 32 times! As I’ve discussed before, it lets you count to the giga-giga-numbers!)
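To put numbers on the doubling, here is a small sketch that counts how many distinct values fit in 16, 32, and 64 bits. The function name `distinct_values` is just an illustrative choice.

```python
def distinct_values(bits: int) -> int:
    """Each added bit doubles the count, so n bits give 2**n distinct values."""
    return 2 ** bits

for width in (16, 32, 64):
    print(f"{width} bits: {distinct_values(width):,} values")
```

Note that the 64-bit count is the 32-bit count multiplied by itself, not multiplied by two.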

[There’s a great parable about what happens when you double something (like a grain of rice) 64 times. I’ll Sideband that parable another time; let’s just say you end up with a lot of rice; an astonishing amount, seriously.]

Anyway, the 32-bit computer ship has all but sailed, and Sideband #32 was on a whole other frequency anyway, so that about does it for 32-bit discussions.

Instead, this post is a two-bit discussion (which makes it sound cheap, but I assure you it’s worth every penny you paid). This post is about the binary system, why we use bits (rather than nice juicy digits) and why we start counting at zero.

Today’s first question is, “Why binary?” Why do we use this two-bit system?

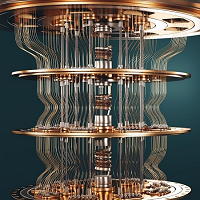

The answer is quite simple: it’s much easier for a computing machine to discriminate between only two choices than between ten. In a computer, a single value is either a zero or a one. It’s either nothing or something. And that makes it easy to tell the difference even in the worst-case circumstances.

Think about someone standing on a distant hill during a foggy night. They have a flashlight that lets them signal you. If it only matters whether the light is on or off, the signal is easy to read. Now imagine a flashlight with a dimmer that allows multiple levels of brightness. How hard is the signal to read if you have to determine exactly how bright the light is? It would be difficult on a clear night, let alone a foggy one.

This is the difference between a binary (two-state) system and our standard way of counting, decimal. For us humans, each value (digit) can be one of ten things; the flashlight can be off (zero, 0) or it can be one of nine levels of brightness (1-9).

A decimal number such as 1967 is like four flashlights in a row. The one on the left has the brightness level “1” (pretty dim) and the next one in line has the full brightness level (“9”). Then comes one set to “6” and one set to “7”. Imagine trying to read that number in flashlights on a distant hill. In the fog. With dirty glasses.

A binary number such as 101010 is like six flashlights in a row. The left one is on, the next one is off, and the rest alternate between on and off. They are easy to distinguish, and if you could see them at all, you could likely read the number accurately. (If you’re wondering what 101010 is in human, it’s an old friend: 42!)
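For anyone who wants to check the flashlights, here is a minimal sketch that reads a row of on/off values from left to right. The function name `binary_to_decimal` is hypothetical; Python's built-in `int(text, 2)` and `bin()` do the same job.

```python
def binary_to_decimal(digits: str) -> int:
    """Read binary digits left to right: double the running total, then add the new bit."""
    value = 0
    for digit in digits:
        value = value * 2 + (1 if digit == "1" else 0)
    return value

print(binary_to_decimal("101010"))  # 42
print(int("101010", 2))             # the built-in conversion agrees
print(bin(42))                      # and back again: 0b101010
```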

So that’s why computers count in binary. It’s purely an engineering decision. Binary is way (way) better for building accurate systems. It’s the same logic as is behind the whole “able, baker, charlie” thing: it makes the information easier to read in worst-case situations.
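The flashlight argument can be sketched in a few lines. Everything here is an assumption made for illustration: brightness is a number from 0.0 (off) to 1.0 (full), the settings are evenly spaced, the reader picks the nearest setting, and the fog dims a full-brightness light to 0.7.

```python
def decode(reading: float, levels: int) -> int:
    """Map a brightness reading in [0.0, 1.0] to the nearest of `levels` evenly spaced settings."""
    step = 1.0 / (levels - 1)        # brightness gap between neighboring settings
    nearest = round(reading / step)  # number of the closest setting
    return max(0, min(levels - 1, nearest))

# Hypothetical fog: the light is sent at full brightness (1.0) but arrives at 0.7.
foggy_reading = 0.7

print(decode(foggy_reading, levels=2))   # two settings: still reads as 1 (on)
print(decode(foggy_reading, levels=10))  # ten settings: reads as 6, not the 9 that was sent
```

With two settings the reading can drift almost halfway across the whole range before it is misread; with ten settings the same drift crosses several levels.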

And it turns out that anything you can do with decimal numbers you can do with binary numbers. The base of the numbering system doesn’t matter at all. And information theory (which we’ll talk about another time) tells us that all information breaks down into bits anyway. Just as all matter is made of particles, all information is made of bits.

Today’s second question is, “What’s up with that counting from zero business?”

Computer people are infamous for counting from zero rather than one, which can seem kind of strange at first. If you count the bottles in a six-pack, you count “0, 1, 2, 3, 4, 5.” The first bottle is zero, which is bad enough, but the last one is five. What happened to bottle six? Who drank my last beer?!?!

But there are some very good reasons for counting from zero. The main one has to do with indexing and, especially, combining indexes. This gets a little technical, so if you’re not interested, you’re excused now. I’ll try not to say anything interesting for the rest of the post so you don’t miss anything.

Imagine you have an array of things in sequentially numbered boxes. We’re talking computer memory, but this works for post boxes, too (assuming you rented a large number of sequentially numbered ones). Let’s assume the number of the first box in the array is #5001 and that you have 100 boxes, so the last one is #5100.

Now suppose you get a text message that someone sent a birthday card to the 42nd box in the array. What is the number of that box? It’s easy to just say that it’s box #5042, but what is the formula a computer might use to determine this in general? (Let’s imagine a constant stream of requests to look in the X-th box.)

The obvious one [first_box + index] doesn’t work. The first box is #5001 and the index we were given is 42, but [5001 + 42 = 5043], which is not the correct box.

But if we index from zero, the first index is zero (not one), which makes the index we were given here 41 (not 42). Now the formula works just fine: [5001 + 41 = 5042]!
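Here is the post box arithmetic as a short sketch. The function names are invented for illustration; the numbers are the ones from the example (first box #5001, 100 boxes).

```python
FIRST_BOX = 5001  # number of the first box in the array, from the example

def box_from_zero_based(first_box: int, index: int) -> int:
    """With indexes starting at zero, the box number is a plain addition."""
    return first_box + index

def box_from_one_based(first_box: int, position: int) -> int:
    """With positions starting at one, every lookup needs an extra subtraction."""
    return first_box + (position - 1)

print(box_from_zero_based(FIRST_BOX, 41))  # 5042
print(box_from_one_based(FIRST_BOX, 42))   # 5042, the same box
```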

Counting from zero also works nicely when you go beyond an array of boxes. A chess board is a two-dimensional grid of boxes (so is an Excel spreadsheet). But computer memory is just numbered boxes in a row, so you lay your grid out as a series of strips in memory. The top row goes first, the second row comes after it, and so on until you reach the last row.

For a chess board, this takes 64 boxes (since a chess board has 64 squares in eight rows of eight). Now suppose your pawn moves to K4. How do you find the right box in your array of 64? Let’s assume that the pawn is on the fourth row down and is the fifth square over (counting from the left).

In one chess notation, this is square E5, but here we’ll assume the top-left square is 1,1 and the lower-right square is 8,8.

Therefore, our pawn stands on square 4,5. In Excel notation, that would be sheet cell E4. (And, by the way, yes, I do know white always moves first. The above “move” is just for illustration.)

The problem is, if we want the memory cell address, p-K4 isn’t helpful at all, and E5 isn’t much better. At least 4,5 gives us some sense of where the pawn is on the grid. It’s four rows down and five columns to the right.

And again, the obvious formula doesn’t quite work:

starting_address + (8 * row) + column

That would give us [5001 + 32 + 5 = 5038], and that is not the correct answer.

Remember that the upper-left square is 1,1. That should be the very first memory cell, #5001. But if we use the formula, it gives us [5001 + 8 + 1 = 5010], which is definitely wrong!

If we use a zero-based index, the first square is 0,0, which gives us [5001 + 0 + 0], and that is the first memory box! The zero-based index of the pawn is 3,4. If we plug that into the formula we get [5001 + 24 + 4 = 5029], and that too is the correct answer!
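The chess board formula, with zero-based rows and columns, can be sketched like this. The names `cell_address` and `BOARD_WIDTH` are illustrative, and the starting address 5001 is carried over from the earlier post box example.

```python
BOARD_WIDTH = 8  # squares per row on a chess board

def cell_address(start: int, row: int, column: int, width: int = BOARD_WIDTH) -> int:
    """Address of a grid cell stored row by row, using zero-based row and column."""
    return start + width * row + column

print(cell_address(5001, 0, 0))  # 5001, the upper-left square
print(cell_address(5001, 3, 4))  # 5029, the pawn (fourth row down, fifth square over)
print(cell_address(5001, 7, 7))  # 5064, the lower-right square

# Going the other way is just as tidy: divide the offset by the row width.
print(divmod(5029 - 5001, BOARD_WIDTH))  # (3, 4)
```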

It takes a bit of a mental shift, but it’s one worth making. Some programmers don’t like that loops go from 0 to N-1. They prefer the logic of looping from 1 to N. And in some cases, that’s actually more helpful, but when calculating offsets, you end up subtracting 1 a lot.

Certain languages where loops specify a start and end number do result in slightly uglier code. Languages that allow a comparison don’t. Compare:

with

As usual the primary rule of source code applies: Do what is most clear.
