This ends an arc of exploration of the Combinatorial-State Automaton (CSA), an idea by philosopher and cognitive scientist David Chalmers, who, despite all these posts, is someone whose thinking I regard very highly on multiple counts. (The only place my view diverges much from his is on computationalism, and even there I see some compatibility.)

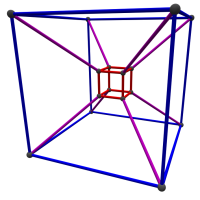

In the first post I looked closely at the CSA state vector. In the second post I looked closely at the function that generates new states in that vector. Now I’ll consider the system as a whole, for it’s only at this level that we actually seek the causal topology Chalmers requires.

It all turns on how far matching abstractions imply matching systems.

A side note: A key reason motivating these posts is that I love state-based systems, so Chalmers’ CSA really caught my eye (plus, it’s about computers, which is kinda my thing).

Fairly early in my career as a software designer (a time of youthful obsessions), I fell in love with state-based architecture and used the technique any place I could.

As I’ve mentioned, state-based systems, for all their power in some situations, are kind of a pain in the ass to design and (especially) to maintain. Over time the pain factor caused me to fall out of love with the idea, and now it’s just a tool in my toolkit — because sometimes the benefits are worth the pain.

But that’s why there are so many posts. 😉

§

There is also the question: on some level, so what?

The CSA really doesn’t factor into Chalmers’ thinking all that much. It seems to me more an illustration that attempts to link computation and cognition in a constructive way.

But I found my thinking crystallizing a bit around the CSA concept. It seems to help clarify some of my thoughts about computationalism.

§ §

We begin with two systems:

- FSA:Brain — essentially, the brain.

- FSA:Computer — essentially, the computer.

I say “essentially” in both cases because an FSA (a finite-state automaton, a simpler cousin of the Turing Machine) is the abstraction that represents the physical object.

These FSAs are descriptions of system behavior. I can’t stress the importance of this enough. An abstraction is just a description of something; it is not the thing!

These descriptions need to be detailed enough to fully capture the behavior of their respective systems. Chalmers specified the neuron level, and computationalism often equates neurons with logic gates, so let’s follow those leads.

Given these systems, we can record the states of neurons or gates during some time period and produce a recording, or listing, of those states during that time.

Let’s notate that like this:

- FSA:Brain ⇒ LIST:Brain

- FSA:Computer(CSA:Brain) ⇒ LIST:Computer

So LIST:Brain is a list of state vectors where neurons are the components, and LIST:Computer is a list of state vectors where logic gates are the components.

These listings are the states of the system that I’ve mentioned before — they are recordings of the system in action.
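To make the notation concrete, here is a minimal Python sketch of a CSA and its listing. It assumes a hypothetical three-component CSA whose components are binary “neurons,” with a simple weighted-threshold rule standing in for the transition function; the weights, threshold, and the names `step` and `record` are illustrative, not anything Chalmers specifies. The output of `record` plays the role of a LIST: one state vector per time step.

```python
from typing import List, Tuple

State = Tuple[int, ...]

# Hypothetical CSA: three binary components ("neurons").
# WEIGHTS[i][j] is an illustrative influence of component j on component i.
WEIGHTS = [
    [0, 1, 1],
    [1, 0, 0],
    [0, 1, 0],
]
THRESHOLD = 1  # assumed firing threshold


def step(state: State) -> State:
    """Transition function: compute the entire next state vector from the current one."""
    return tuple(
        1 if sum(w * s for w, s in zip(row, state)) >= THRESHOLD else 0
        for row in WEIGHTS
    )


def record(start: State, steps: int) -> List[State]:
    """Produce a listing: the sequence of state vectors the CSA passes through."""
    listing = [start]
    for _ in range(steps):
        listing.append(step(listing[-1]))
    return listing


if __name__ == "__main__":
    for vector in record((1, 0, 0), 4):
        print(vector)
```

Starting from `(1, 0, 0)`, four steps give `(1, 0, 0)`, `(0, 1, 0)`, `(1, 0, 1)`, `(1, 1, 0)`, `(1, 1, 1)`. Each entry is a whole state vector, which is the sense in which LIST:Brain is a list of neuron-level states.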

Note that the computer is running a program, specifically CSA:Brain, so the claim is that both systems, at least in the abstract, go through the same “mental” states. The brain certainly does, but does the computer?

§

The first point here is that:

LIST:Brain ≠ LIST:Computer

The connection between neuron states in the brain and LIST:Brain is one-to-one. The listing is a recording of actual brain neurons involved in cognition. It is a recording of mental states.

But LIST:Computer tracks the operation of logic gates (not the state vector with neuron representations; those need not appear in the listing at all). The computer is executing a computation, so its logic gates reflect the details of that computation.

The two listings aren’t the same, because they involve different levels of organization and operation.

LIST:Brain is at the level of neuron activity — the same level we’re presuming mental states occur — whereas LIST:Computer reflects a much lower level simulating neuron activity with numbers.

So what the brain is actually doing and what the computer is actually doing are two very different things.
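The difference in levels can be sketched by running one hypothetical CSA two ways. This sketch assumes an illustrative three-component threshold rule as a stand-in for CSA:Brain, and treats each multiply-accumulate and each compare-and-store as one primitive machine operation; real hardware works at a far finer grain (instructions, then gates). `list_abstract` records one vector per transition, while `list_computer` records every primitive operation, so the two listings differ in both length and kind. All names here are hypothetical.

```python
from typing import List, Tuple

State = Tuple[int, ...]
Op = Tuple[object, ...]  # a logged primitive operation, e.g. ("mac", i, j, acc)

# Illustrative CSA rule: binary components, weighted threshold.
WEIGHTS = [[0, 1, 1], [1, 0, 0], [0, 1, 0]]
THRESHOLD = 1


def machine_step(state: State, trace: List[Op]) -> State:
    """Compute the next state one primitive operation at a time, logging each one."""
    nxt = [0] * len(state)
    for i, row in enumerate(WEIGHTS):
        acc = 0
        for j, w in enumerate(row):
            acc += w * state[j]                 # one multiply-accumulate
            trace.append(("mac", i, j, acc))
        nxt[i] = 1 if acc >= THRESHOLD else 0   # one compare-and-store
        trace.append(("store", i, nxt[i]))
    return tuple(nxt)


def run_both(start: State, steps: int) -> Tuple[List[State], List[Op]]:
    """Return the abstract listing and the machine-level listing for the same run."""
    list_abstract = [start]
    list_computer: List[Op] = []
    state = start
    for _ in range(steps):
        state = machine_step(state, list_computer)
        list_abstract.append(state)
    return list_abstract, list_computer


if __name__ == "__main__":
    abstract, computer = run_both((1, 0, 0), 4)
    print(len(abstract), "abstract states;", len(computer), "machine operations")
```

Even for this three-neuron toy, each abstract transition corresponds to twelve machine-level entries (four transitions give 48), and none of those entries is itself a state vector.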

§

As far as physical causality goes, the brain and the computer function according to a completely different set of causes.

To the extent computationalism equates neurons and logic gates, and then equates the physical causality of the brain with the physical causality of the computer,…

Well, I hope you now see how this is a false comparison. It’s apples and things that aren’t anything like apples.

(It’s also part of why simulated rain isn’t wet.)

§ §

Now Chalmers has said all along that the causal topology is reflected in the abstraction the computer executes (not the mere operation of the machine).

The point of the CSA is to quantify that abstraction, to give it a handle we can discuss. It’s pretty obvious, really, that the computer running the CSA and the brain are entirely different systems.

The true physical equivalence is between the computer logic gate states and something low-level in the brain: the neurophysiology that makes neurons work. The logic gates correspond more to those low-level causal processes than to neurons, because the “neurons” in the computer system are abstract.

Everything turns on the idea that, if it can be said the computer performs the same abstraction as the brain does, then there must be functional identity between the systems.

But simulated rain shows this isn’t always so. (In fact, when it comes to modeling the physical world, pretty much all simulated X isn’t Y, so the idea of matching abstractions already seems suspect.)

§

Just how closely can it be said the computer is performing the same abstraction as the brain does?

The brain transitions from state to state cleanly, all at once. The simulation, by contrast, passes through billions of illegal transitory states between each pair of legal states.

The brain transitions due to physical causes at the neuron level — that is, the physical causality involved directly participates in moving the system from state to state.

The computer transitions from abstract state to abstract state due to coding and numeric values — that is, due to information — and there is no direct physical causality involved (because that’s at the logic gate level).
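The illegal transitory states between legal states can be illustrated with a toy. This sketch assumes the computer keeps the state vector in memory and overwrites it one component at a time, reading from a snapshot of the old state so the end result matches the CSA’s all-at-once transition. The weights, threshold, and function names are hypothetical, and the granularity (one component per write) is far coarser than real hardware, which would show many more intermediate configurations. `mixed` collects the in-between vectors that match neither the state being left nor the state being entered.

```python
from typing import List, Tuple

State = Tuple[int, ...]

# Illustrative CSA rule: binary components, weighted threshold.
WEIGHTS = [[0, 1, 1], [1, 0, 0], [0, 1, 0]]
THRESHOLD = 1


def serial_run(start: State, steps: int) -> Tuple[List[State], List[State]]:
    """Update memory one component per write; return legal states and mixed states."""
    live = list(start)
    legal = [tuple(live)]
    mixed: List[State] = []  # intermediate vectors equal to neither endpoint
    for _ in range(steps):
        old = tuple(live)
        new = tuple(
            1 if sum(w * s for w, s in zip(row, old)) >= THRESHOLD else 0
            for row in WEIGHTS
        )
        for i in range(len(live) - 1):  # every write before the last one
            live[i] = new[i]
            snapshot = tuple(live)
            if snapshot not in (old, new):
                mixed.append(snapshot)
        live[-1] = new[-1]              # final write completes the transition
        legal.append(tuple(live))
    return legal, mixed


if __name__ == "__main__":
    legal, mixed = serial_run((1, 0, 0), 4)
    print("legal:", legal)
    print("mixed:", mixed)
```

From `(1, 0, 0)` over four transitions, memory passes through four such mixed vectors. One of them, `(0, 0, 0)`, is a state the CSA never visits on that run at all; the others do occur as legal states, but only at other points in the sequence.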

As an aside, Scott Aaronson recently wrote:

> In other words, a physical system becomes a “computer” when, and only when, you have sufficient understanding of, and control over, its state space and time evolution that you can ask the system to simulate something other than itself, and then judge whether it succeeded or failed at that goal.

Which is interesting to consider in the current context.

It would seem, firstly, that the brain only simulates itself, and secondly, in some sense can’t give a wrong answer (in the sense of being a physical object — cognition, obviously, is wrong at least as often as not).

§ §

So, in the brain, the abstraction level, the description level, is one-to-one with the system operation and at the same level.

In the computer, the abstraction level is high above the system level with a one-to-very-many relationship to system operation.

A crucial point is the degree to which FSA:Brain and CSA:Brain correlate: in the brain, the abstraction level is the system operational level. The abstraction and the causality are linked one-to-one.

As for fBrain, the function that calculates states, its execution likewise involves various points, amid much other supporting computation, where we can say, “at this moment the logic is calculating a given causal relationship.” But that moment is no different from all the very nearly identical calculations surrounding it.

It sits amid billions of other moments that, according to LIST:Computer, are indistinguishable from any other momentary system state the computer happens to be in.

This is a crucial point: By looking at LIST:Computer, there is really no way to tell when the system was in any given CSA state. Only a careful analysis of the listing, essentially amounting to running the computation, can tell us that.

Which raises an interesting question: If we take LIST:Computer and work through it by hand, line by line, using the listing to determine memory values with pen and paper, does the same set of mental states occur?

Would the pen-and-paper analysis result in the same cognition?
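The claim that LIST:Computer doesn’t reveal when the system was in a given CSA state can be sketched as well. Here the low-level listing is assumed to be nothing but a flat sequence of `(address, value)` memory writes, with no marker saying when a CSA state is complete. The hypothetical `replay` function recovers the CSA states only by re-executing those writes and by bringing in outside knowledge of the program: which addresses hold the components, and how many writes make up one transition. Done by hand, this replay is exactly the pen-and-paper exercise. The CSA rule, names, and trace format are all illustrative assumptions.

```python
from typing import List, Tuple

State = Tuple[int, ...]
Write = Tuple[int, int]  # (address, value)

# Illustrative CSA rule: binary components, weighted threshold.
WEIGHTS = [[0, 1, 1], [1, 0, 0], [0, 1, 0]]
THRESHOLD = 1


def write_trace(start: State, steps: int) -> List[Write]:
    """Low-level listing: only memory writes, no record of where states begin or end."""
    memory = list(start)
    trace: List[Write] = []
    for _ in range(steps):
        old = tuple(memory)
        for i, row in enumerate(WEIGHTS):
            memory[i] = 1 if sum(w * s for w, s in zip(row, old)) >= THRESHOLD else 0
            trace.append((i, memory[i]))
    return trace


def replay(start: State, trace: List[Write], width: int) -> List[State]:
    """Recover CSA states by re-executing the writes.

    Assumes knowledge the trace does not contain: components live at
    addresses 0..width-1, and every `width` writes complete one transition.
    """
    memory = list(start)
    states = [tuple(memory)]
    for k, (addr, value) in enumerate(trace, start=1):
        memory[addr] = value
        if k % width == 0:  # transition boundary, known only from the program
            states.append(tuple(memory))
    return states


if __name__ == "__main__":
    trace = write_trace((1, 0, 0), 4)
    print(trace)
    print(replay((1, 0, 0), trace, 3))
```

Replaying the same trace with the wrong period (`width=2`) still produces a tidy-looking sequence of vectors; it just isn’t the CSA’s sequence, and the trace itself doesn’t label which reading is the intended one.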

§ § §

There is also the basic issue that, even if we can declare that the computer, in some sense (albeit fleetingly and transiently), goes through a set of abstract states identical to those the brain goes through…

Can we make a claim of identity?

Well,… simulated X isn’t Y, so no?

Really, all this has done is return us to square one. It still depends on whether you hold that a numeric simulation can be everything it simulates. It turns out the CSA doesn’t change the analysis much.

It also depends on your view of the brain and mind and how that all works.

I do enjoy the mystery of it all. The basic question goes back two thousand years or more. The last couple of hundred years have seen it examined in greater depth, and very recently it has been examined very closely.

But so far the fundamental questions remain.

§

If a tree falls in the forest…

Stay listed, my friends!

∇

July 17th, 2019 at 11:45 am

(As far as the closing tag, it’s a bit of a pun. A ship can list…)

July 17th, 2019 at 4:27 pm

Richard Brown has an interesting post on his blog that gets into some of the same Chalmers territory, in particular the ‘dancing qualia’ and ‘fading qualia’ arguments.
