As a result of lurking on various online discussions, I’ve been thinking about computationalism in the context of structure versus function. It’s another way to frame the Yin-Yang tension between a simulation of a system’s functionality and that system’s physical structure.

In the end, I think it does boil down to the two opposing propositions I discussed in my Real vs Simulated post: [1] An arbitrarily precise numerical simulation of a system’s function; [2] Simulated X isn’t Y.

It all depends on exactly what consciousness is. What can structure provide that could not be functionally simulated?

Let me start by exploring the two basic concepts:

Structure refers to a composite of parts and the relationships between those parts. Both are equally important.

Structures can be abstract or concrete. Software typically uses lots of abstract data structures, but even a shopping list is a simple abstract structure. Obviously, anything physical has a concrete structure.

Structure can exist at different levels. Atoms have a structure. Building materials (made from atoms) have their own structure, and the building made from them also has a structure. (In fact, usually is a structure.)

Function is an abstraction that’s harder to define precisely. It refers to what something can do.

A mathematical function takes mathematical input(s) and returns a mathematical value. Computer software functions do the same (they are equivalent to mathematical functions).

A tool (concrete or abstract) has a functionality. Its function is to perform a task of some kind: drilling a hole; frying an egg; applying a filter to a photo; correcting your spelling.

Note this is a slightly different flavor of function(ality) compared to a mathematical function. It’s another case of physical function versus numeric processing.

§

Let me also differentiate two other terms:

To emulate is to copy or clone something. If you emulate someone, you try to copy their behavior.

A software emulation tries to provide everything that what it emulates does. For example, a Windows emulation running on MacOS tries to, for all intents and purposes, be Windows to the apps it runs.

To simulate is to describe or represent something.

Any virtual reality is a simulation that represents a real or imagined scene. A weather simulation describes how the weather behaves.

This seems clearer when we’re not talking about brains and minds. Consciousness tends to complicate any discussion. (Ironically, one of the first requirements for any discussion is possessing consciousness.)

We can ask two questions: What does an emulation of consciousness entail? Or, failing to emulate, can a simulation describe consciousness effectively?

[As I’ve said before, I believe a physical isomorph of the brain (an emulation of structure) could work. I think of this as the “Positronic Brain” due to Asimov.]

§ §

Consider traditional gas-engine cars versus electric cars.

To the extent their function is to provide short-range transportation, which is probably the primary application, there is no real difference.

But their functional differences become apparent the more we examine them, and the more contexts we place them in. In some venues, one may perform noticeably better than the other. Electric motors have many advantages over gas combustion engines, but gas has a few advantages of its own.

This isn’t a good example, because here we’re really talking about an emulation. An electric car emulates a gas car. (A video game simulates a car. No simulation can provide transportation.)

Metaphorically, an electric car is the Positronic Brain compared to a gas car’s biological one. It’s a physical isomorph, at least at the car level.

§

Which brings up an important point about functional analysis: The granularity matters — sometimes a lot.

Gas and electric cars function the same (in their common context) as cars, but many of their internals differ greatly. That’s kind of the whole point with electric cars — they don’t have gas engines.

So, the level of “black box” functionality is important.

One issue with brains is that we don’t know what functional unit is important. Where can we replace a gas engine with an electric one?

For instance, is the neural network (including synaptic behavior) enough, or are things like myelin sheathing and glial cells also vitally important? (I think the question is more, how can they not matter?)

On the other end of the spectrum, a black box brain — something that is nothing at all like a structural isomorph. Something with nothing in common with a brain, but which acts like one.

A full functional replacement, but not a structural one.

§

That certainly isn’t going to work with cars due to their specific physical requirements. But the brain can be viewed as a black box that connects to the body via the nervous system.

Is an alternate structure that faithfully replicates the function at that level possible? Can a mind arise in something that isn’t a structural isomorph?

Specifically, can a numerical simulation calculate mind?

It’s important to ask that question in three contexts:

1. A physical simulation of the brain

2. A composite functional emulation of the brain

3. A unified “mind” algorithm

An interesting aspect of [1] and [2] is that those don’t require a full understanding of how consciousness, or even just the brain, works.

This is especially true of [1]. We just need to simulate the physical structure at some appropriate level. The neuron level almost certainly won’t cut it, but something higher than quantum might (unless mind really does depend on quantum effects).

In Stephenson’s Fall, they used lots of powerful quantum computers to simulate reality at the quantum level. From their point of view, it was just a quantum system.

The middle option, [2], requires some understanding, but it also offers an investigative approach. By simulating selected brain functions, scientists can explore how well those might work.

Models using semantic vectors, for instance, can offer insights into how our minds work without necessarily duplicating the mechanism.

But [3] — assuming it actually exists — does require a unified theory of mind, a full understanding of consciousness.

The second and third options both ask conventional computing to emulate decidedly unconventional computing. One way around the challenge is to assume the brain’s computing is actually more conventional than it appears, that the brain really is a Turing Machine in disguise.

The third option absolutely assumes this. For a “mind algorithm” to exist, the brain/mind system has to already be a discrete symbol processing machine.

§ §

So, let’s assume one of those options seems to work. We have a black box that, hooked up to inputs and outputs, appears conscious and responsive.

Is it conscious? How could we tell? What do we even mean by the question?

Alan Turing suggested we talk to it, and while that has problems, no one has really come up with anything better. (Part of what confounds this is lack of a clear definition of consciousness in the first place.)

I like the idea of a Rich Turing Test: It involves prolonged interaction over time; an exploration of likes and dislikes, opinions, ideas, humor, and curiosity. (That last one is huge to me.)

With a human (or similar) consciousness, I’d expect to explore tastes in music, movies, books, food, sports, hobbies, and friends.

So, what about a system that reports or claims it has these qualities and seems to demonstrate them, especially over time?

Would we take its word that it was just as conscious as we are?

§

We already respond to robot dogs (and name our cars), so from a social point of view, chances are we’ll love’m like waffles. (Who doesn’t love waffles?)

At the very least we need to ask some serious questions about what consciousness actually is. (It bemuses me severely that all these people study consciousness without agreeing on a definition.)

I think the real question is whether such functionality is even possible.

For now, at least, it begs the question to ask if a black box that acts conscious really is conscious, because no such black boxes are in evidence, nor do we have clue one about how to make one.

§

John Searle made (or tried to make) the point that canned responses don’t strike us as truly modeling consciousness, and further that a numerical simulation of consciousness is such a canned system.

I think that’s partially right, but it overlooks things like memory and process. Searle’s Giant File Room, as presented, is just a lookup system. Brains do more than look things up.

Therefore, any functionality we simulate must include self-reflection, multi-level meta-thinking, and self-modifying code.
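To make the lookup-versus-process distinction a bit more concrete, here’s a minimal Python sketch. The names (`CANNED`, `lookup_reply`, `StatefulResponder`) are mine and purely illustrative, and nothing here pretends to model a mind or to capture Searle’s argument. It only shows that a pure lookup gives the same reply to the same input every time, while even a trivial system with memory doesn’t have to.

```python
# Hypothetical illustration: a pure lookup table versus a process with memory.

CANNED = {
    "hello": "Hello!",
    "how are you?": "Fine, thanks.",
}


def lookup_reply(prompt: str) -> str:
    """Stateless: the same prompt always yields the same canned reply."""
    return CANNED.get(prompt.lower(), "I don't understand.")


class StatefulResponder:
    """Keeps a history, so a reply can depend on what came before."""

    def __init__(self) -> None:
        self.history: list[str] = []

    def reply(self, prompt: str) -> str:
        key = prompt.lower()
        times_seen = self.history.count(key)  # consult memory
        self.history.append(key)              # update memory
        if times_seen == 0:
            return lookup_reply(prompt)
        return f"You've said that {times_seen} time(s) before."
```

Asking `lookup_reply("hello")` twice gives the same answer both times; asking a `StatefulResponder` twice does not. That’s obviously a very long way from self-reflection or meta-thinking, but it’s the smallest step away from a file room.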

The bottom line for me regarding functionality is that we simply don’t know what might be missing or how it might be missing. There is a tension between those two propositions: simulated functional results (which are always numbers) versus physical structural results (which can be weather, laser light, bridge traffic, a growing tree, or whatever).

Will those numbers tweak those nerves in the right way?

What I’m saying is that we have a long road ahead to get to that black box. (If it can be gotten to at all. That remains to be seen.)

§

Maybe ultimately, in these technological gender — even person — fluid times we just treat all black boxes as ducks.

If it walks, talks, quacks, and flies, like a duck, then introduce it to some tasty orange sauce.

Stay structural, my friends!

∇

January 7th, 2020 at 10:35 am

As I noted last night, what I’d like to see for structure theories is some rationale for exactly what in particular the structure is and why it’s essential. We can say that it’s because the only things we know to be conscious are ones that have that structure, but that was also true about a lot of other things (playing chess, Go, Jeopardy, driving, etc) until it wasn’t. Something needs to be identified that plausibly can’t be replaced with equivalent functionality.

Understanding that there are definitely things that fall into one category or another, is there a sharp line between an emulation and a simulation? If so, what would you say it is?

“For a “mind algorithm” to exist, the brain/mind system has to already be a discrete symbol processing machine.”

How would you distinguish a mind algorithm from things like thermostat algorithms, or music player ones? When digital computers gradually took over the role of the old analog computer systems, were they taking over something for which a discrete symbol processing machine already existed? Don’t we have a long history of creating algorithms to replace processes that weren’t previously discrete symbol systems?

“It bemuses me severely that all these people study consciousness without agreeing on a definition.”

That’s the thing. I think it reveals that what we call “consciousness” isn’t just one thing, but a collection of processes. If some portion of them are there, our intuition of consciousness will be triggered, although people will argue endlessly about exactly which are necessary and sufficient, and which are optional.

“For now, at least, it begs the question to ask if a black box that acts conscious really is conscious, because no such black boxes are in evidence, nor do we have clue one about how to make one.”

This seems somewhat correct but an overstatement to me. We definitely can’t make one yet, although we can make ones for functional fragments, such as playing chess, etc. And the various GWTs and other theories at least point in potential directions. Dehaene discussed simulations in his book that exhibited workspace dynamics, albeit without the rich collection of consumer processes necessary to compose something we’d be tempted to regard as conscious.

I’m on board with the rich Turing test. My only qualm is that the original test was about telling whether or not an entity is human, rather than whether it’s conscious. Given our intuitions about animals, it seems possible that, at some point, our intuitions of consciousness are going to be triggered for systems that can’t yet pass the strict Turing test, rich or shallow.

January 7th, 2020 at 11:45 am

Some interesting points and questions here!

“Something needs to be identified that plausibly can’t be replaced with equivalent functionality.”

Yep, that’s the goal. What’s confounding is not knowing what consciousness is, so it’s hard to say what might exist in a physical structure that doesn’t in a simulation.

I keep thinking about it in the context of my laser light analogy. Clearly it takes a specific physical structure to emit such light, and while a simulation can describe that happening very well, it can’t produce actual photons. So, per consciousness, what might be the “photons” here that can only arise from physical structure?

I have no idea. But I’m working on it. 😉

Photons are actual physical things, which we can detect, and which have a clear effect on other physical things. Per my analogy, that suggests consciousness must have some physical manifestation and measurable effects on the world.

Well consciousness obviously does have measurable effects on the world (if we deny epiphenomenalism), so we’re good there. Might the “photons” be, in part, the brain waves we can detect? Might there be some (physical, measurable) “ghost” in the machine — the “standing wave” of a conscious mind that I’ve mentioned in the past?

Similar to how the atomic behavior in a lasing material combines to form a high level system that emits coherent light, might the billions of neurons in our brains combine to form a high level system that emits coherent thought?

Which still leaves the really interesting question about what a simulation would do. What would it mean to describe simulated “thoughteons” in action? A simulation can describe a laser cutting steel. Would a mind simulation describe a mind doing something?

I have no idea. 😀

“…is there a sharp line between an emulation and a simulation?”

Never thought about it… generally speaking similar ideas do have fuzzy boundaries…

My gut reaction is there is a sufficiently clear distinction to differentiate most cases, but I’d have to play a game of “what about this?” to check my thinking. Some of it involves how one thinks about those words.

Is a simulation a kind of emulation? One could validly claim so. Is an emulation a kind of simulation? Also a valid claim. There’s an aspect of dealer’s choice here.

I tend towards more restrictive word definitions — I like the precision and texture that grants. I lean towards seeing them as exclusive concepts (as I defined them in the post). For me the sharp line involves isomorphism, I think.

From the perspective of a Windows app, running in actual Windows or in an emulator should be 100% indistinguishable. (Similar to how (on a small scale) gravity and acceleration are indistinguishable — we could say acceleration “emulates” gravity.) To the app, Windows and the emulator are exactly isomorphic.

But a simulation always has a tell — sometimes a huge one. The whole “simulated X isn’t Y” thing is a big tell.

“How would you distinguish a mind algorithm from things like thermostat algorithms, or music player ones?”

Another intriguing question! Hmmm…

Thermostats always were analog-to-digital converters, so on the back end they were discrete symbolic systems. On the front end, they deal with a temp continuum, which is obviously analog. Replacing that with a chip and algorithm doesn’t really change anything, I think. It still needs to process the analog temp and make a go/no-go decision.

I would not call analog music systems discrete symbol processors, and digital music systems definitely replaced them. There are similar changes in video systems.

Calculators, going back to abacuses, always were discrete, too. I don’t know much about analog computing, including how far digital calculation has replaced it. Some things (resistance networks, for example) might be computationally intense for a digital machine, but easily solved with an analog network.

But these more involve the second option — replacing the analog brain with digital computing that does the same functions without the same internals (as with digital music or video).

A mind algorithm is a Holy Grail of sorts — the assumption that what goes on in our minds really is an algorithm. There is no comparison with thermostats or music — we never saw those things as algorithmic in the first place.

In fact, I think the mind algorithm is a chimera — I don’t think brains are algorithmic any more than anything physical is.

“If some portion of them are there, our intuition of consciousness will be triggered,”

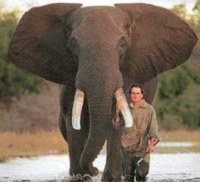

That’s a good point; I hadn’t looked at it that way. It really is the elephant and blind men.

That said, there is also an irreducible aspect that might be a foundation. The problem is that we’re all still too blind to grasp the elephant.

“This seems somewhat correct but an overstatement to me.”

Ha! 😀

“My only qualm is that the original test was about telling whether or not an entity is human,…”

Good point. There are two questions in play: [1] Does this putative black box have a human intelligence? [2] Is this black box conscious?

The second is, I think, more general and would apply to animals and aliens. It ties in with the whole “what is consciousness” question. I see the former as more about testing a system we’ve built to see if it reaches the goals we’ve set for it.

So I see the RTT as having value in that testing of human-built systems, but really none at all when it comes to animals or aliens.

January 7th, 2020 at 12:44 pm

The more I think about that underlying algorithm business the more I think it’s a whole separate topic. At least in some sense there is an underlying algorithm for a thermostat — If (temp-condition) Then {do-something} Else {don’t}.
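As a minimal Python sketch of that thermostat rule, assuming a simple heating setup (the function name and the 20 °C setpoint are made up for illustration):

```python
def heater_should_run(temp_c: float, setpoint_c: float = 20.0) -> bool:
    """Go/no-go decision for a heater: True means turn the heat on."""
    if temp_c < setpoint_c:   # temp-condition
        return True           # do-something
    else:
        return False          # don't
```

It could be collapsed to `return temp_c < setpoint_c`, but the longer form mirrors the If/Then/Else shape above.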

But music and most other physical processes are a different matter… probably worth their own post. Or at least a separate comment thread. I need to ponder the matter a bit…

January 7th, 2020 at 12:51 pm

One initial thought I have is that these are two different things: [1] An underlying algorithm a system implements; [2] An algorithm that describes a system’s behavior to identical (or even better) precision.

I’m thinking music is the second case…
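One way to picture that second case, as a rough Python sketch: a continuous tone isn’t implementing an algorithm, but a short routine can describe it as a list of numbers. The function name, the sine-wave stand-in for “music,” and the 16-bit quantization are all assumptions chosen for illustration, not a claim about how any real audio system works.

```python
import math


def sample_tone(freq_hz: float, sample_rate_hz: int, n_samples: int) -> list[int]:
    """Describe a continuous sine tone as discrete signed 16-bit samples."""
    max_amp = 32767  # largest signed 16-bit value
    return [
        round(max_amp * math.sin(2 * math.pi * freq_hz * n / sample_rate_hz))
        for n in range(n_samples)
    ]


# Eight samples of a 440 Hz tone at an 8 kHz sample rate.
samples = sample_tone(440.0, 8000, 8)
```

The result is just numbers describing the wave; raising the sample rate or bit depth tightens the description, but it’s still a description.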

January 7th, 2020 at 1:26 pm

I’m seriously thinking this is a post-worthy topic…

January 7th, 2020 at 7:08 pm

On emulation, one of the interesting things about that term is it’s often applied to software emulating hardware, such as terminal emulators for connecting to legacy systems, or emulators running old Atari 2600 games. That seems like a case where it could be argued that the emulation is definitely not the original thing, since there’s no actual hardware there. But because it runs on other hardware to interface with the world, it works.

Maybe the dividing line is an emulation is in a framework which allows it to have the same causal effects in the environment that the original had. Of course, when we switch to minds, the question becomes, what happens when we put a simulation of a mind in a robot body (new hardware)?

“But these more involve the second option — replacing the analog brain with digital computing that does the same functions without the same internals”

You’re totally right. I didn’t parse the options you listed well enough on my first pass this morning. Sorry! My bad.

“So I see the RTT as having value in that testing of human-built systems, but really none at all when it comes to animals or aliens.”

One of the things that I think is most likely, is that long before we build a human level general intelligence, we’re going to build a fish level, frog level, mouse level, or dog level intelligence. It might be worth thinking about what kind of tests we could use for those types of systems.

On the algorithms, sounds like maybe some interesting posts coming.

January 7th, 2020 at 9:28 pm

“That seems like a case where it could be argued that the emulation is definitely not the original thing,”

An emulation isn’t required to be the original thing, only to be (as indistinguishably as possible) like the original thing. From the point of view of the Atari game, it would “think” it’s running on Atari hardware so long as all its software expectations were met. Likewise, legacy systems “think” they’re talking to legacy terminals.

There’s an interface between the emulation and its consumer — so long as that interface is fully emulated, the consumer is none the wiser.

(Or, if knowing, doesn’t see a difference to care about. For instance, if an electric car is seen as an emulation of a gas car, it’s known the two are different, but since they have the same interface — they both act like cars — the difference doesn’t matter.)
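The interface idea can be sketched in Python. All the names here (`Terminal`, `LegacyTerminal`, `TerminalEmulator`, `legacy_system`) are hypothetical, and the “terminal” is reduced to a single method; the point is only that a consumer written against an interface can’t tell which implementation it’s talking to.

```python
from typing import Protocol


class Terminal(Protocol):
    """The interface the consumer depends on."""

    def write(self, text: str) -> str: ...


class LegacyTerminal:
    """Stands in for the original hardware terminal."""

    def write(self, text: str) -> str:
        return f"[80x24] {text}"


class TerminalEmulator:
    """Different internals, same observable behavior at the interface."""

    def __init__(self) -> None:
        self.columns, self.rows = 80, 24

    def write(self, text: str) -> str:
        return "[{}x{}] {}".format(self.columns, self.rows, text)


def legacy_system(terminal: Terminal) -> str:
    """The consumer: it sees only the interface, never the implementation."""
    return terminal.write("LOGIN:")
```

Here `legacy_system(LegacyTerminal())` and `legacy_system(TerminalEmulator())` return the same string, so from the consumer’s side there is nothing to tell the two apart.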

“Maybe the dividing line is an emulation is in a framework which allows it to have the same causal effects in the environment that the original had.”

Yeah, that works. Having the same causal effects involves being an isomorphism regarding those effects.

“…what happens when we put a simulation of a mind in a robot body…”

It begs the question to assume we can simulate a mind, so it’s a hard question to answer.

It does raise the interesting topic of how important a body (or belief one has a body) is to consciousness. Can consciousness be disembodied?

“It might be worth thinking about what kind of tests we could use for those types of systems.”

Yeah, good point. We base our beliefs about animals on their physical behaviors.

If you recall that “hide and seek” AI experiment, it provided a way to observe the behavior of the algorithms (in a really cute way). I suppose we’ll use some version of observation and testing there as well.

That said, my beliefs about dogs come from looking in their eyes and interacting with them on a very close level. Some of that would be missing from interaction with an AI.

“On the algorithms, sounds like maybe some interesting posts coming.”

😀 Already scheduled for 6:28 AM tomorrow!